In the summer of 2019, a conversation began between BlackTrax and Plymouth State University in New Hampshire. We were preparing for a performance at the Prague Quadrennial in the Czech Republic, a show that incorporated digital media in a manner sometimes referred to as “digital puppetry.” BlackTrax is a system for controlling lighting and media using infrared cameras. The BlackTrax team was interested in what we were doing in Prague, and we worked out plans with them to do a project in our mainstage space with some of their equipment.

We began with a three-day seminar. Our students learned how to do everything the system involves, including calibrating the cameras and programming BlackTrax to control lighting and media.

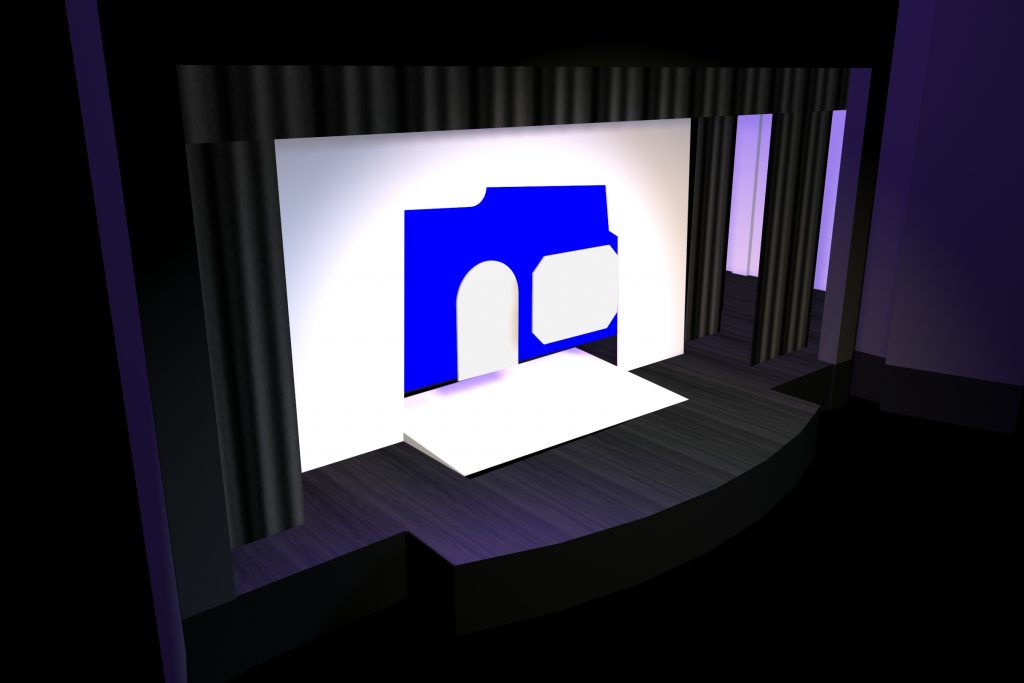

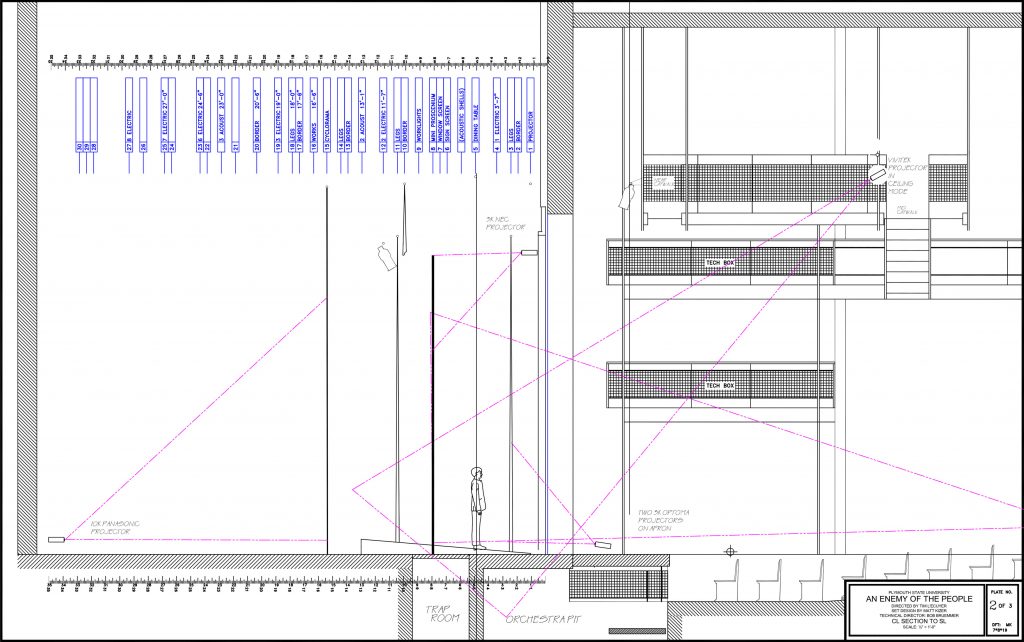

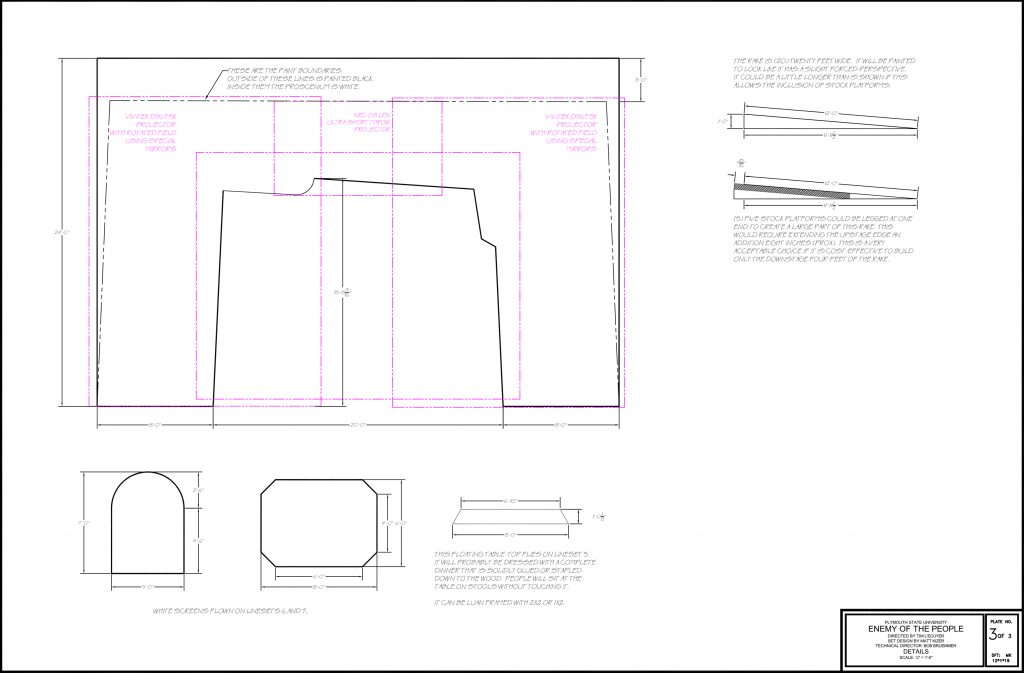

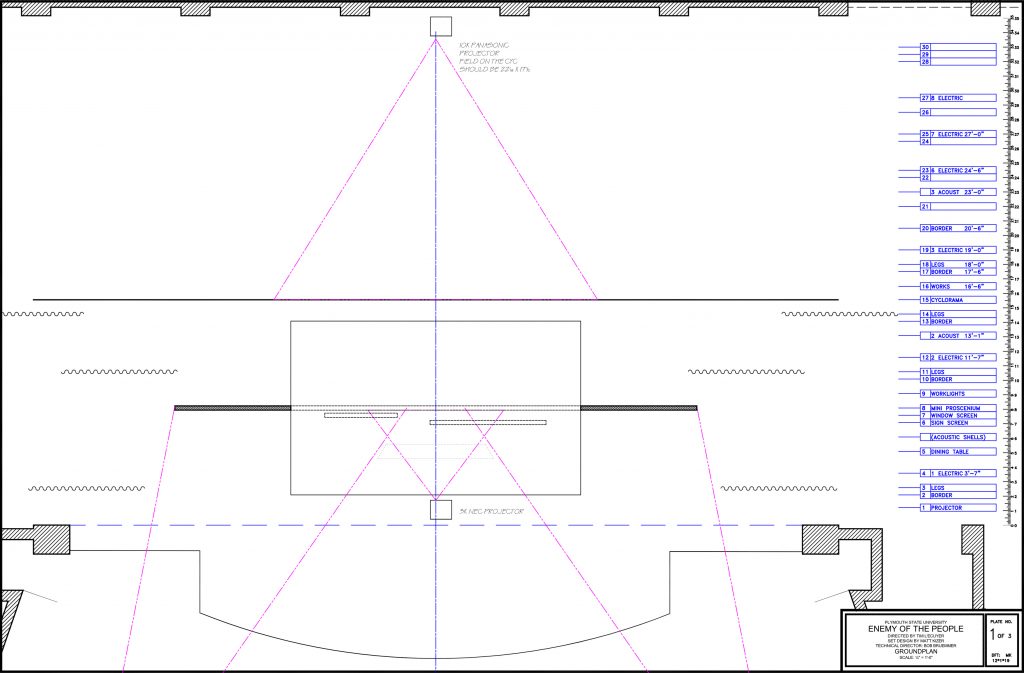

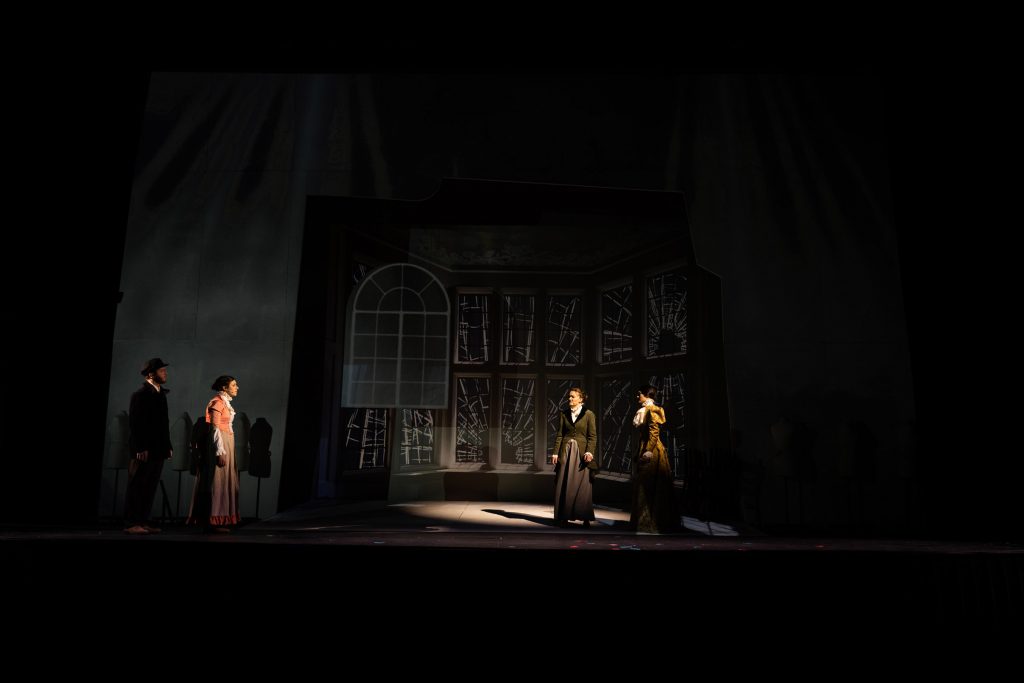

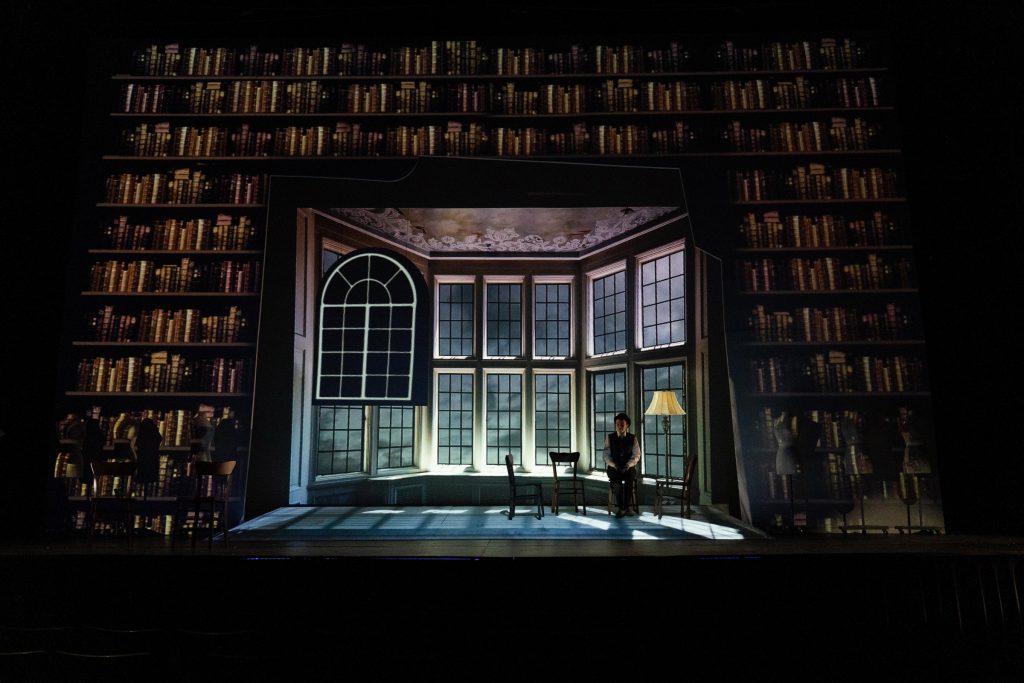

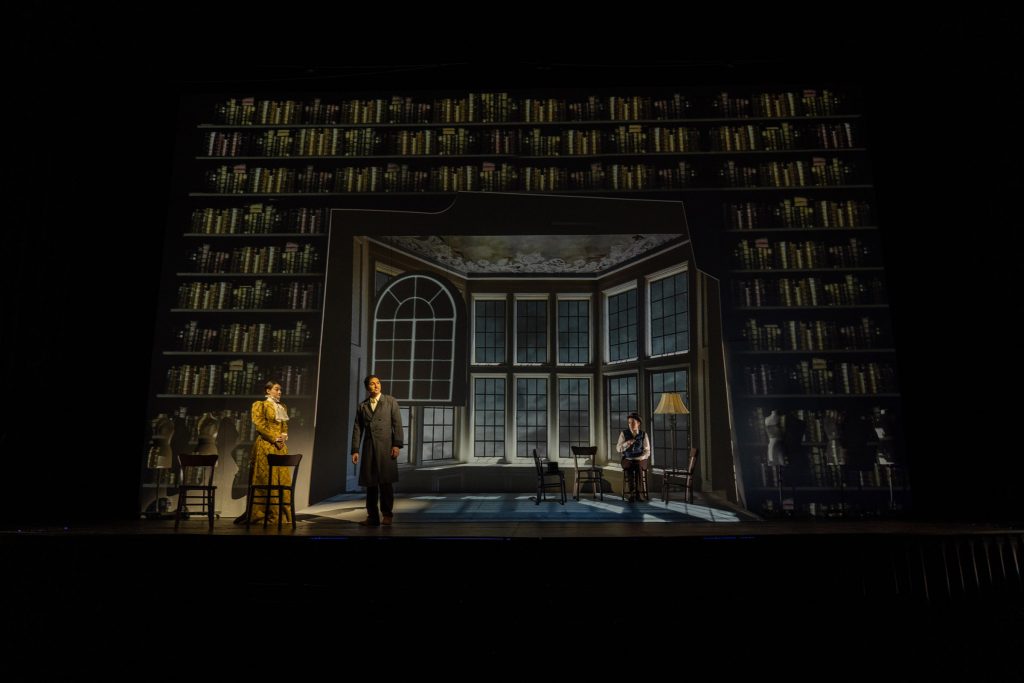

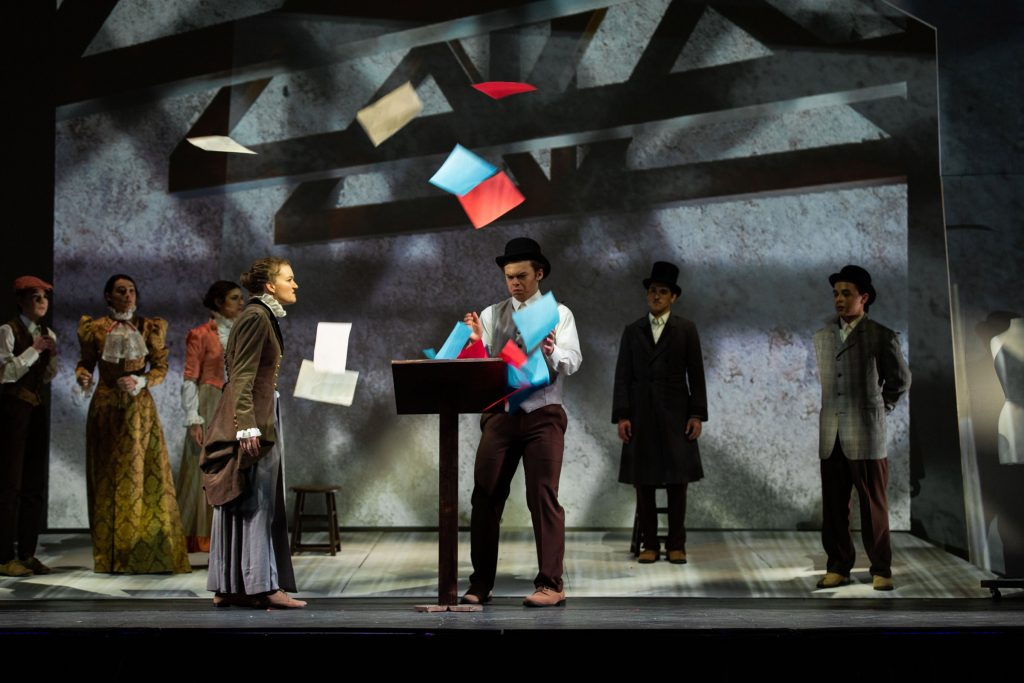

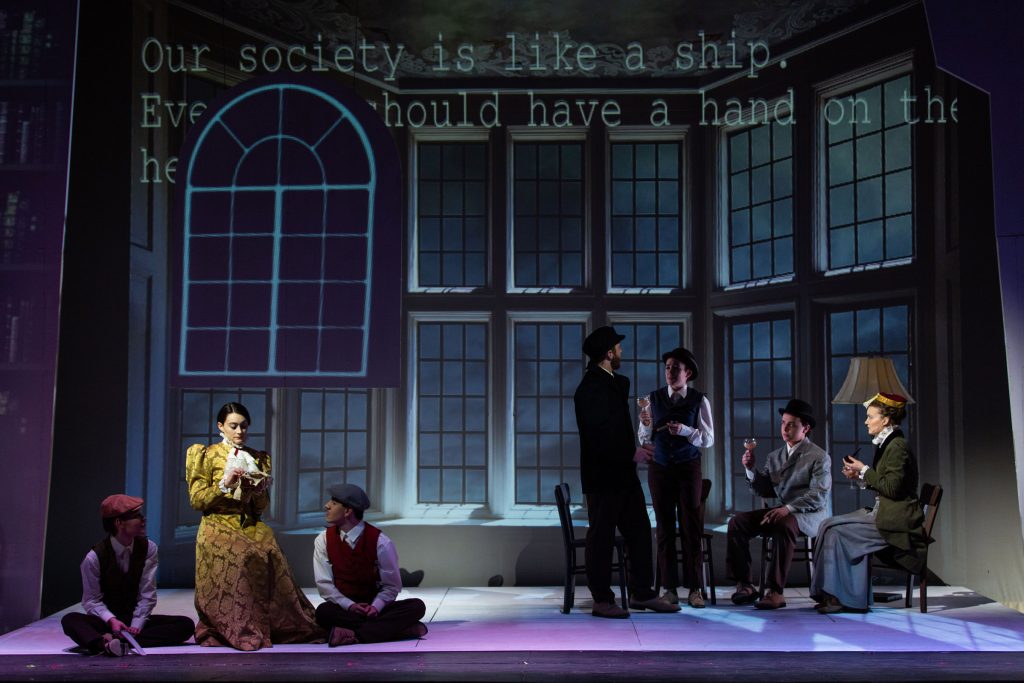

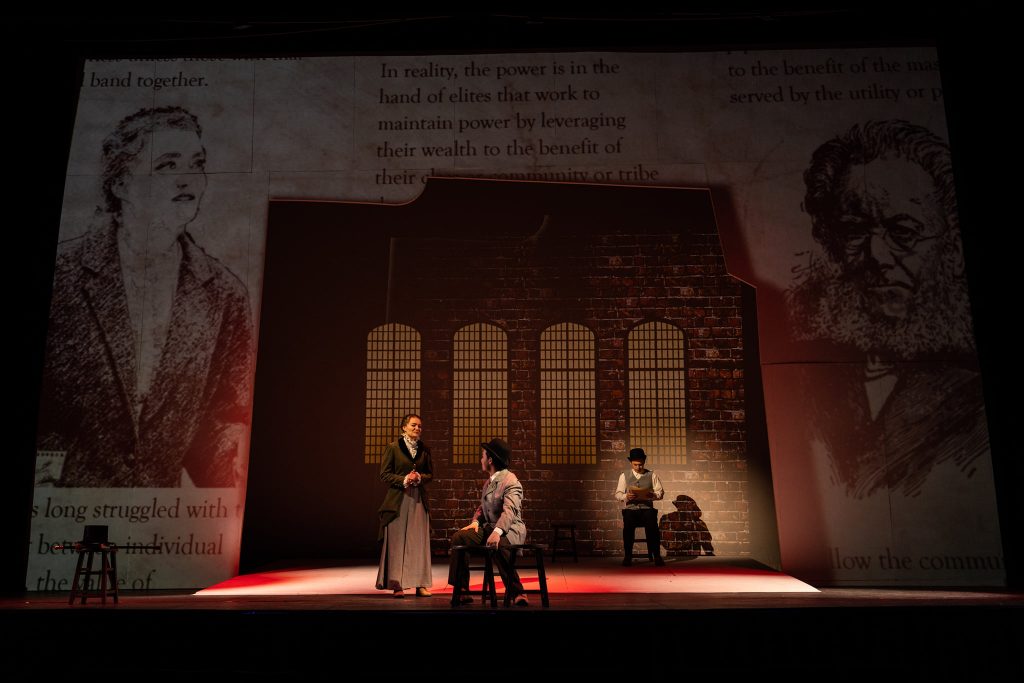

“An Enemy of the People” often gets a historical, realistic interior for a set. We had decided long before that we were going in a different direction. The set might be what a 19th-century stage designer would have fantasized for the far future. It included a period show-portal, a rake, and a backdrop as part of the physical set in a manner reminiscent of the 1880s. These were all traditional components, but each could be digitally painted and repainted throughout any scene.

This is a play about an impending pandemic disaster. The local scientists, politicians, and business owners struggle for control over popular belief. We thought this was a timely show. It opened in February of 2020, before any of us imagined what was going to unfold in the world.

The simplest application of the BlackTrax technology was subtle. The acting light in the show constantly flowed to fit exactly where we needed it. Each actor had their own special that came up when they entered and doused when they exited. There were few cues for this, and no need for spot ops. Isolation lighting followed every performer as they moved. It was an ideal environment for projections. The movers tracked the actors with tight, soft spots. It was like having liquid light that flowed where we needed it.

In addition to controlling the lighting, we also tied media to tracking data. Elements in the Watchout timeline responded to the location of specific performers in the space. Light sources and shadows shifted with actors. The scenery responded to the movement and shifting of the ensemble.
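As a rough illustration of tying media to tracking data, the sketch below maps a tracked stage position onto a pixel position on a media canvas. It is not the Watchout expression we used or any BlackTrax API; the `StageArea` type, the `stage_to_pixels` function, the metre units, and the choice of axes are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class StageArea:
    """Hypothetical playing area in metres, with the origin at one corner."""
    width: float
    depth: float


def stage_to_pixels(x_m, y_m, area, canvas_w, canvas_h):
    """Map a tracked stage position to a pixel position on the media canvas.

    Assumption: stage x maps to canvas x and stage y maps to canvas y.
    A real rig would choose axes to suit the projection surface.
    """
    # Normalise each coordinate to 0..1, clamping so a performer who steps
    # just outside the area pins the media to the nearest canvas edge.
    u = min(max(x_m / area.width, 0.0), 1.0)
    v = min(max(y_m / area.depth, 0.0), 1.0)
    # Scale to the last valid pixel index on each axis.
    return round(u * (canvas_w - 1)), round(v * (canvas_h - 1))


# Illustrative values only: a 10 m x 8 m area and a small 101 x 51 canvas.
area = StageArea(width=10.0, depth=8.0)
centre = stage_to_pixels(5.0, 4.0, area, 101, 51)
```

A media element placed at `centre` would sit in the middle of the canvas whenever the performer stands at centre stage, and would slide with them as new positions arrive.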

In some ways, it was a lot to learn in a short amount of time. We had bad weather, and lost tech time. But we kept finding the system to be liberating in unexpected ways. Once rules were established for a scene, the lighting cued itself. We relied on “French Scenes,” where cues happened when actors entered or exited. The system knew what to do, followed the rules we established, and in many ways ran itself.
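The “French Scene” idea can be sketched in a few lines: compare who is inside the playing area now with who was there a moment ago, and cue each performer's special accordingly. This is only a sketch under stated assumptions, not how BlackTrax or our console was programmed. The function names, the rectangular playing area, the performer labels, and the `fade_up` and `fade_out` actions are all hypothetical.

```python
def on_stage(positions, width, depth):
    """Return the names of performers whose tracked (x, y) is inside the area.

    Assumption: the playing area is a rectangle from (0, 0) to (width, depth).
    """
    return {
        name
        for name, (x, y) in positions.items()
        if 0 <= x <= width and 0 <= y <= depth
    }


def french_scene_cues(previous, current):
    """Return (performer, action) pairs for everyone who entered or exited."""
    entered = sorted(current - previous)  # on stage now, not before
    exited = sorted(previous - current)   # on stage before, not now
    return ([(name, "fade_up") for name in entered]
            + [(name, "fade_out") for name in exited])


# Illustrative frames: actor_b walks on while actor_a stays on stage.
before = on_stage({"actor_a": (2.0, 3.0), "actor_b": (-1.0, 1.0)}, 10.0, 8.0)
after = on_stage({"actor_a": (2.5, 3.0), "actor_b": (0.5, 1.0)}, 10.0, 8.0)
cues = french_scene_cues(before, after)
```

Because the rule only looks at changes in who is on stage, nothing fires while performers simply move around, which matches how few cues the tracking needed in practice.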

We closed the show and did a partial strike. We set up for the next part of the process: student-driven projects and experimentation.

We had one week before spring break and six days with a dark stage. We added a white scrim to the space. We built expressions in Watchout that let us match media to performers or to their hands. The student groups wrote scripts based on outrageous concepts and ambitious plans. The students were planning for ghosts, magic, Pokémon powers, and The Force.

Everyone went away for spring break.

And then, none of them came back to campus. We all went to our homes and into our own types of quarantine.

The class took a big pivot in how it proceeded from there. We went online. The students learned to create projection media and to code, and they researched many related branches of technology.